A Beginner's Guide to Docker

How containerization works with images in Docker

Docker is often overlooked by new developers, yet understanding how containerization works makes it much easier for individuals and teams to manage their projects and deliver multiple applications hassle-free. Docker is an open-source set of platform-as-a-service (PaaS) tools that use operating-system-level virtualization to deliver software in packages called containers, which run the same way in every environment: development, testing, staging, and production. The software that hosts the containers is called Docker Engine.

Containers vs Virtual Machines (VMs)

Containers and virtual machines differ in several ways, but the primary difference is that a virtual machine virtualizes an entire machine down to the hardware layer, while a container virtualizes only the software layers above the operating system and shares the host's kernel. This makes containers a lighter-weight, more agile way of handling virtualization, because they don't need a hypervisor. A hypervisor, or virtual machine monitor (VMM), is software that creates and runs VMs; it allows one host computer to support multiple guest VMs by virtually sharing its resources, such as memory and processing.

What Is a Container?

A container is a standard unit of software that packages an application with all its necessary dependencies and configuration, so it can be easily shared and moved around. This makes development more efficient, because the application runs quickly and reliably from one computing environment to another.

Image vs Container

An image is a read-only template of the actual package: an immutable (unchangeable) file that contains the source code, libraries, dependencies, tools, and other files an application needs. A container is a running instance of an image. You can run multiple containers from the same image, and because containers are isolated from one another, each one can be in a different state.

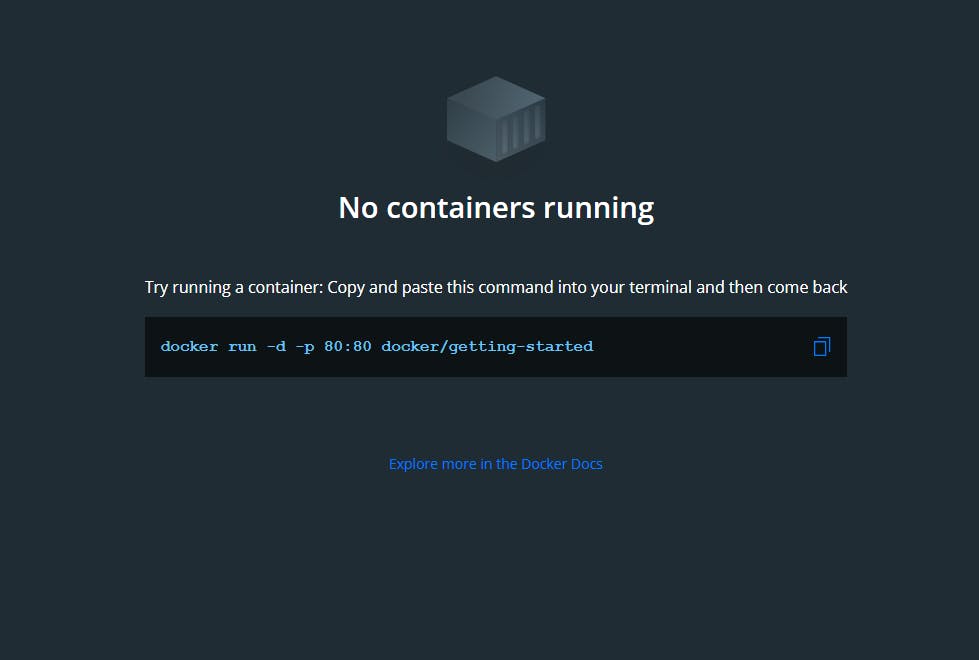
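To make the image/container split concrete, here is a loose JavaScript analogy, not how Docker is implemented: the image is a frozen (read-only) map of files, and each container adds its own private writable layer on top, so two containers started from the same image can end up in different states. makeImage and runContainer are hypothetical helpers, and deleting files is not modeled.

```javascript
// Analogy only, not Docker's implementation: an image is a read-only set of
// files and each container gets its own writable layer on top of it.
// makeImage and runContainer are hypothetical helpers.

function makeImage(files) {
  // Object.freeze makes the file map read-only, like an image.
  return Object.freeze({ ...files });
}

function runContainer(image) {
  const writableLayer = new Map(); // this container's private changes

  return {
    read(path) {
      // Check the container's own layer first, then fall back to the image.
      return writableLayer.has(path) ? writableLayer.get(path) : image[path];
    },
    write(path, contents) {
      // Writes land in this container's layer; the image is never modified.
      writableLayer.set(path, contents);
    },
  };
}

const image = makeImage({ "/app/index.js": "console.log('hi')" });
const first = runContainer(image);
const second = runContainer(image);

first.write("/app/log.txt", "request 1");

console.log(first.read("/app/log.txt"));  // request 1
console.log(second.read("/app/log.txt")); // undefined: separate state
console.log(image["/app/log.txt"]);       // undefined: the image is unchanged
```

Both containers still see the image's own files (second.read("/app/index.js") returns the original source), but only the container that wrote log.txt can see it.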

Docker Installation

How you install Docker depends on your operating system, so start at the official Docker website. In this tutorial, we install Docker Desktop on Windows, so we first have to make sure the Windows Subsystem for Linux (WSL) is enabled, as explained on the official website. Our system must also meet Microsoft's prerequisites for installing WSL. Once WSL and a Linux distribution such as Ubuntu are installed, we can download and install Docker Desktop and make sure it is running in the background.

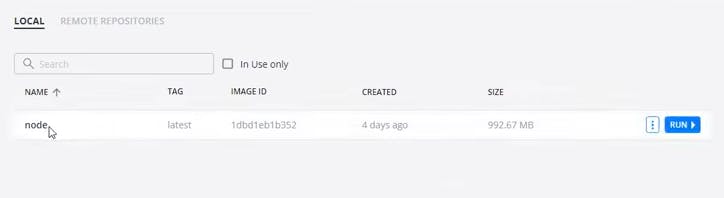

Docker Hub is an online registry of both private and public pre-made images. Let's download the official Node.js image by running docker pull node in our terminal. We can also choose which version to download by adding a tag, as in docker pull node:17-alpine; without a tag, Docker pulls the image tagged latest. The Node.js image now appears in the Docker Desktop app, with a Run option when you hover over it.
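A tag such as 17-alpine is simply the part after the colon in an image reference, and Docker assumes latest when the tag is omitted. The sketch below splits a reference into repository and tag. parseImageRef is a hypothetical helper; it deliberately ignores digests (@sha256:...) and does not validate names.

```javascript
// Simplified sketch: split an image reference such as "node:17-alpine" into a
// repository and a tag. Assumptions: no digests ("@sha256:..."), no validation.
function parseImageRef(ref) {
  // Only a colon after the last "/" separates the tag; an earlier colon belongs
  // to a registry host:port, as in "localhost:5000/my_image".
  const lastSlash = ref.lastIndexOf("/");
  const colon = ref.indexOf(":", lastSlash + 1);
  if (colon === -1) {
    return { repository: ref, tag: "latest" }; // no tag given: Docker uses latest
  }
  return { repository: ref.slice(0, colon), tag: ref.slice(colon + 1) };
}

console.log(parseImageRef("node"));
console.log(parseImageRef("node:17-alpine"));
console.log(parseImageRef("localhost:5000/my_image"));
```

The third case shows why the tag cannot be found by splitting on the first colon: the colon in a registry address is part of the repository name.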

Creating a Dockerfile

A Dockerfile is a text file of instructions that Docker executes, in order, to build an image; we run the build from the command line.

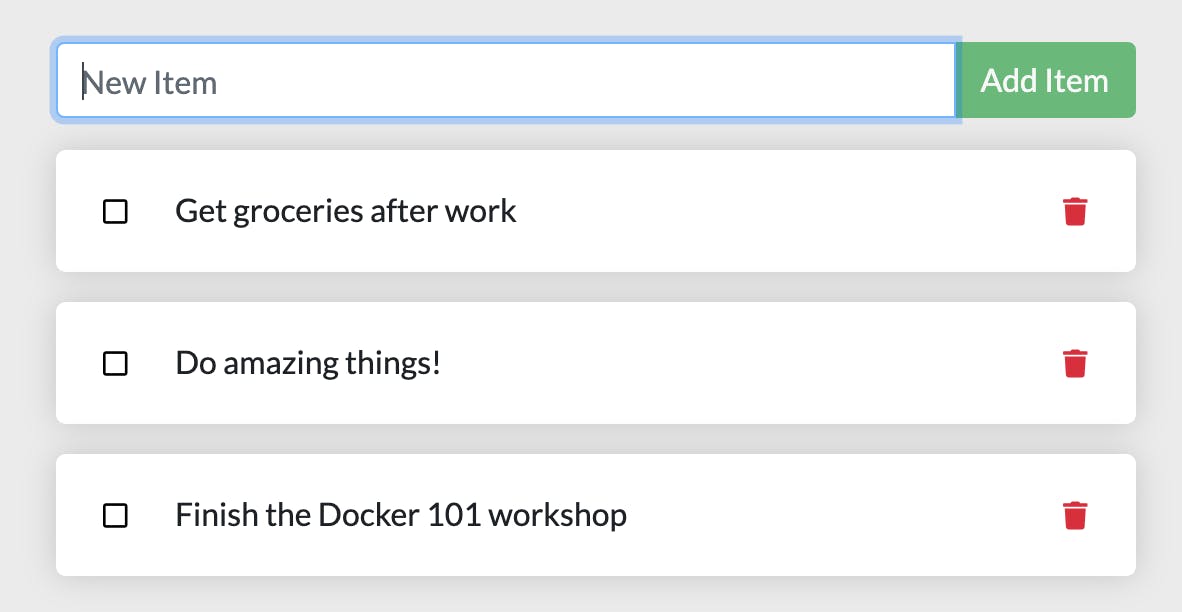

To create a dockerfile, let's

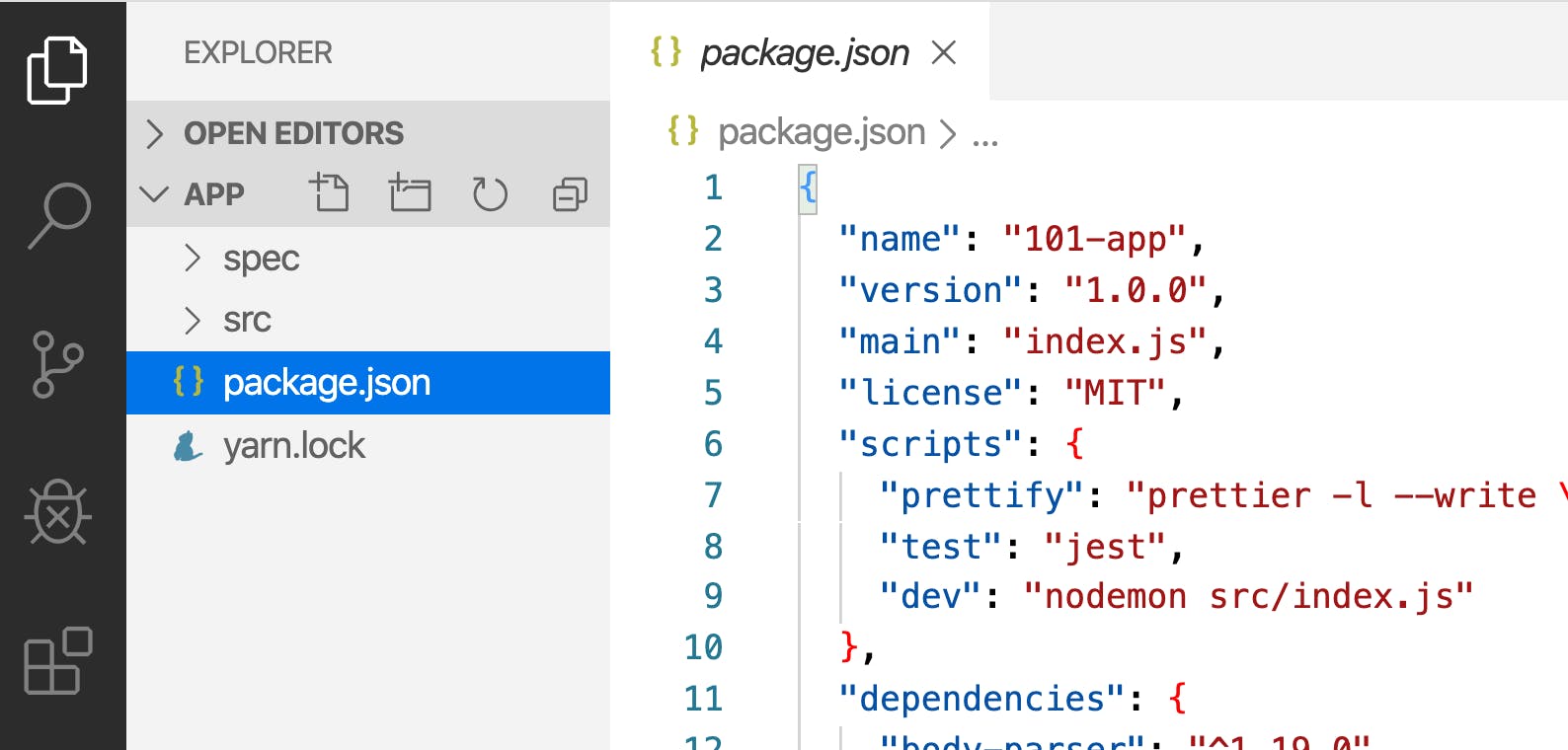

assume we have the application below running on port:5000 with express as a dependency.

Next, create a file named Dockerfile (with no extension) at the root of the project folder. It holds the instructions for building the project's image:

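# Start from the official Node.js 17 image based on Alpine Linux
FROM node:17-alpine

# Run the following instructions inside /app in the image
WORKDIR /app

# Copy only the dependency manifests first so the install step can be cached
COPY package*.json ./

RUN npm install --production

# Copy the rest of the source code
COPY . .

# Document the port the app listens on
EXPOSE 5000

CMD ["node", "src/index.js"]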
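Since a Dockerfile is just a list of instructions, a few lines of JavaScript can walk through one and label each instruction. This is a rough sketch, not Docker's real parser: it assumes one instruction per line (no \ continuations or heredocs). Its labels follow the rule of thumb from Docker's best-practices guide that only RUN, COPY, and ADD create layers that add content, while FROM picks the base image and the rest mostly record metadata. parseDockerfile and classify are hypothetical names.

```javascript
// Sketch only: not Docker's real parser. Assumes one instruction per line
// (no "\" continuations, heredocs, or parser directives).
const LAYER_INSTRUCTIONS = new Set(["RUN", "COPY", "ADD"]);

function classify(instruction) {
  if (instruction === "FROM") return "base"; // starts from the base image
  if (LAYER_INSTRUCTIONS.has(instruction)) return "layer"; // adds files to the image
  return "metadata"; // e.g. WORKDIR, CMD, EXPOSE, ENV
}

function parseDockerfile(text) {
  return text
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line !== "" && !line.startsWith("#")) // skip blanks and comments
    .map((line) => {
      const [word, ...rest] = line.split(/\s+/);
      const instruction = word.toUpperCase(); // instructions are case-insensitive
      return { instruction, args: rest.join(" "), kind: classify(instruction) };
    });
}

const dockerfile = `
FROM node:17-alpine
WORKDIR /app
COPY . .
RUN npm install --production
CMD ["node", "src/index.js"]
EXPOSE 5000
`;

for (const step of parseDockerfile(dockerfile)) {
  console.log(step.kind.padEnd(9), step.instruction, step.args);
}
```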

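To build the image, run the following from the project root (the final . tells Docker to use the current folder as the build context):

docker build -t my_image .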

We now have an image that packages the app with all its necessary dependencies; to run it in an isolated container, we use docker run. We can also create a .dockerignore file at the project root and add node_modules to it, so our local node_modules folder is not copied into the image, since npm install already installs the dependencies inside it.

EXPOSE 5000 only documents that the app listens on port 5000 inside the container; it does not make the app reachable from our computer. To send requests from the local machine, publish the port when starting the container with -p host_port:container_port, for example docker run -p 5000:5000 my_image. Using the same number on both sides is simplest, but you can map any free host port instead, such as -p 8080:5000.

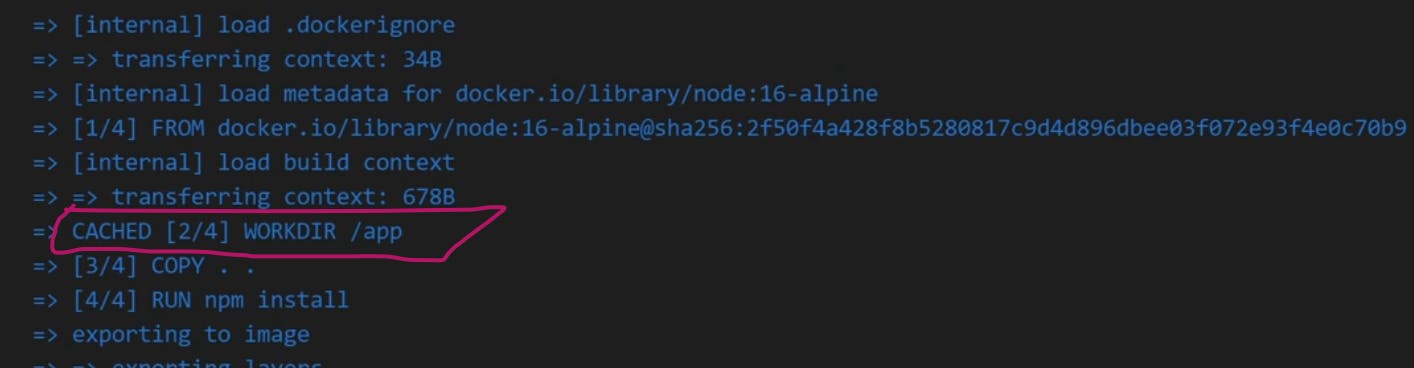
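A -p value reads host side first and container side second, optionally preceded by a host IP. The hypothetical parsePublish helper below spells that out for the common forms; it assumes no port ranges and no IPv6 addresses. One related detail: inside the container the app should listen on 0.0.0.0 rather than 127.0.0.1, otherwise the published port has nothing to forward to.

```javascript
// Sketch of how a -p/--publish value maps ports. Handles "container",
// "host:container" and "ip:host:container", each with an optional "/tcp" or
// "/udp" suffix. Assumptions: no port ranges and no IPv6 addresses.
function parsePublish(spec) {
  const [ports, protocol = "tcp"] = spec.split("/");
  const parts = ports.split(":");
  if (parts.length > 3) throw new Error(`unsupported -p value: ${spec}`);
  return {
    // Docker binds published ports on all host interfaces unless an IP is given.
    hostIp: parts.length === 3 ? parts[0] : "0.0.0.0",
    // With only a container port, Docker picks a free host port (null here).
    hostPort: parts.length >= 2 ? Number(parts[parts.length - 2]) : null,
    containerPort: Number(parts[parts.length - 1]),
    protocol,
  };
}

for (const spec of ["5000:5000", "5000:7000", "127.0.0.1:8080:5000"]) {
  const m = parsePublish(spec);
  console.log(`-p ${spec}: host ${m.hostIp}:${m.hostPort} -> container ${m.containerPort}/${m.protocol}`);
}
```

Binding to 127.0.0.1 on the host side, as in the last example, keeps the app reachable only from the local machine.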

Layer Caching

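Each instruction in a Dockerfile is a build step, and Docker caches the result of each step as a layer (Docker's best-practices guide notes that only RUN, COPY, and ADD create layers that add to the image's size; instructions such as WORKDIR, CMD, and EXPOSE mostly record metadata). Because an image is read-only, we have to rebuild it after every change to our files. The first build stores each layer in the cache; on a rebuild, Docker reuses cached layers until it reaches the first instruction whose input has changed, such as a COPY of a modified file, and then re-runs that instruction and every one after it. That is why it pays to copy package.json and run npm install before copying the rest of the source: editing the code then leaves the cached dependency install untouched.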
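The cascade described above, where one changed input invalidates its own layer and every later one, can be simulated in a few lines. This is a simplified model with stated assumptions: each step's cache key is a SHA-256 hash of the previous key plus the instruction text, and a COPY step's key also includes the contents of the files it copies; real BuildKit cache keys are more involved. build, naive, ordered, v1, and v2 are hypothetical names.

```javascript
const { createHash } = require("crypto");

// Simplified model of Docker's layer cache (assumptions, not BuildKit's real
// keys): each step's key hashes the previous step's key plus the instruction,
// and a COPY step's key also includes the contents of the files it copies.
const sha256 = (text) => createHash("sha256").update(text).digest("hex");

function build(steps, files, cache) {
  let parentKey = "";
  return steps.map((step) => {
    let input = step;
    if (step.startsWith("COPY ")) {
      // Assumption: the first argument is the source; "." means every file.
      const src = step.split(" ")[1];
      const copied = Object.keys(files)
        .filter((name) => src === "." || name === src)
        .sort()
        .map((name) => `${name}=${files[name]}`)
        .join("\n");
      input += "\n" + sha256(copied);
    }
    const key = sha256(parentKey + "\n" + input);
    const cached = cache.has(key); // hit only if this exact chain was built before
    cache.add(key);
    parentKey = key; // a changed key here changes every later key too
    return { step, cached };
  });
}

const naive = ["FROM node:17-alpine", "WORKDIR /app", "COPY . .", "RUN npm install --production"];
const ordered = ["FROM node:17-alpine", "WORKDIR /app", "COPY package.json ./", "RUN npm install --production", "COPY . ."];

// Hypothetical project files before and after editing only the source code.
const v1 = { "package.json": '{"dependencies":{"express":"^4"}}', "src/index.js": "v1" };
const v2 = { ...v1, "src/index.js": "v2" };

for (const [label, steps] of [["naive", naive], ["ordered", ordered]]) {
  const cache = new Set();
  build(steps, v1, cache); // first build fills the cache
  const rebuild = build(steps, v2, cache); // rebuild after the code change
  console.log(label + ": " + rebuild.map((r) => (r.cached ? "cached" : "rebuilt")).join(", "));
}
// naive: cached, cached, rebuilt, rebuilt
// ordered: cached, cached, cached, cached, rebuilt
```

In the naive order the code change forces npm install to run again; in the ordered version only the final COPY is rebuilt.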

Managing Docker Images and Containers

Managing images and containers matters, especially once many leftover copies pile up. docker images lists all the images we have locally. docker ps (named after the Unix ps, "process status", command) shows the containers that are currently running, and docker ps -a shows all containers, including stopped ones. docker rm my_image removes the container named my_image. Removing a running container fails with an error, but docker rm -f my_image forcibly stops and removes it. To be explicit about which kind of object you are removing, use docker image rm my_image for an image or docker container rm my_image1 for a container.
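To see the difference between docker ps and docker ps -a, and why docker rm sometimes needs -f, the sketch below groups containers by state from the output of docker ps -a --format "{{.Names}}|{{.Status}}". The sample output is made up for illustration, groupByState is a hypothetical helper, and it assumes that a status starting with Up means the container is running.

```javascript
// Sketch: split the output of
//   docker ps -a --format "{{.Names}}|{{.Status}}"
// into running and stopped containers. Assumption: a status that starts with
// "Up" means the container is running. groupByState is a hypothetical helper.
function groupByState(psOutput) {
  const running = [];
  const stopped = [];
  for (const line of psOutput.split("\n")) {
    if (line.trim() === "") continue; // ignore blank lines
    const [name, status = ""] = line.split("|");
    (status.startsWith("Up") ? running : stopped).push(name);
  }
  return { running, stopped };
}

// Made-up sample output for illustration.
const sample = ["my_image|Up 3 minutes", "my_image1|Exited (0) 2 hours ago", "hunk_c|Created"].join("\n");

const { running, stopped } = groupByState(sample);
console.log("docker ps shows:", running.join(", ")); // docker ps shows: my_image
console.log("docker rm works without -f on:", stopped.join(", ")); // my_image1, hunk_c
```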

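We can even clean up everything unused at once with docker system prune -a, which removes all stopped containers, unused networks, all images not used by at least one container, and the build cache.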

Volumes

The difference between docker run and docker start is that docker run always creates a new container from an image (in detached mode only if you pass -d), while docker start restarts an existing container. Volumes are Docker's mechanism for persisting data: their contents survive even when the container stops. We can also bind-mount the project folder into the container using its absolute path, so that nodemon inside the container restarts the project automatically whenever we edit a file:

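docker run --name my_image_nodemon -p 5000:5000 --rm -v C:\Users\Rowjay\Documents\docker:/app -v /app/node_modules my_image:nodemon

Here --rm removes the container when it stops, the first -v bind-mounts the project folder onto /app (the WORKDIR), and -v /app/node_modules creates an anonymous volume so that the bind mount does not hide the node_modules folder installed in the image.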

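We must also make sure package.json has a dev script that starts the app with nodemon. The -L (legacy watch) flag makes nodemon poll for file changes, which helps because change events from a Windows folder often do not reach a Linux container:

"scripts": {
  "dev": "nodemon -L index.js"
}

Then build the image from a Dockerfile like the one below by running docker build -t my_image:nodemon . in the project folder: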

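FROM node:14-alpine

# Install nodemon globally so the dev script can use it
RUN npm install -g nodemon

WORKDIR /app

# Install dependencies before copying the source so this layer stays cached
COPY package.json .

RUN npm install --production

COPY . .

EXPOSE 5000

CMD ["npm", "run", "dev"]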
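Why does the run command need the second -v /app/node_modules? When mounts are nested, the more specific one covers its part of the path, so the anonymous volume shields node_modules from the bind mount of the project folder. The hypothetical resolveMount function below models that rule, assuming the bind mount targets /app (the WORKDIR); it is a simplification of Docker's behavior, not an API.

```javascript
// Simplified model of nested mounts (an assumption about the rule, not a Docker
// API): among the mounts that cover a container path, the one with the longest,
// most specific target wins.
function resolveMount(mounts, containerPath) {
  const covering = mounts.filter(
    (m) => containerPath === m.target || containerPath.startsWith(m.target + "/")
  );
  covering.sort((a, b) => b.target.length - a.target.length); // most specific first
  return covering.length > 0 ? covering[0].source : "image filesystem";
}

// The two -v flags from the run command, assuming the bind mount targets /app.
const mounts = [
  { target: "/app", source: "bind mount of the project folder" },
  // A new anonymous volume starts as a copy of what the image has at that path.
  { target: "/app/node_modules", source: "anonymous volume" },
];

for (const p of ["/app/index.js", "/app/node_modules/express/package.json", "/usr/local/bin/node"]) {
  console.log(`${p} -> ${resolveMount(mounts, p)}`);
}
```

Without the second mount, /app/node_modules would resolve to the project folder on the host, which has no Linux-installed dependencies (or none at all, if node_modules is ignored).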

Docker Compose

Running several projects this way means typing long docker run commands by hand, which is slow and error-prone. Docker Compose solves this by describing the containers in a single YAML file.

Create a file named docker-compose.yaml in the root folder of the project and add the following:

version: "3.8"
services:
  api:
    build: ./hunk
    container_name: hunk_c
    ports:
      - "5000:5000"
    volumes:
      - ./hunk:/app
      - /app/node_modules

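Run docker-compose up to build and start the project (with Compose V2, the command is docker compose up). To clean up, docker-compose down --rmi all -v stops and removes the containers and networks that up created, together with all images used by the services and their volumes.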
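The api service in the Compose file stands for roughly the same build and docker run flags we typed earlier. The sketch below prints that equivalent pair of commands, assuming the bind mount targets /app. The image name hunk_api and the project path /home/me/project are hypothetical, and real Compose also creates a network and names the image itself.

```javascript
const path = require("path");

// Illustration only: roughly the docker run arguments that the "api" service
// stands for. The image name "hunk_api" and project path are hypothetical.
function absolutize(volume, projectDir) {
  const colon = volume.indexOf(":");
  // Anonymous volumes ("/app/node_modules") and absolute paths pass through.
  if (colon === -1 || !volume.startsWith(".")) return volume;
  // Compose resolves relative host paths against the project directory.
  return path.posix.resolve(projectDir, volume.slice(0, colon)) + volume.slice(colon);
}

function toDockerRunArgs(service, image, projectDir) {
  const args = ["run", "--name", service.container_name];
  for (const p of service.ports ?? []) args.push("-p", p);
  for (const v of service.volumes ?? []) args.push("-v", absolutize(v, projectDir));
  args.push(image);
  return args;
}

// The "api" service from docker-compose.yaml, as a plain object.
const api = {
  build: "./hunk",
  container_name: "hunk_c",
  ports: ["5000:5000"],
  volumes: ["./hunk:/app", "/app/node_modules"],
};

console.log(["docker", "build", "-t", "hunk_api", api.build].join(" "));
console.log(["docker", ...toDockerRunArgs(api, "hunk_api", "/home/me/project")].join(" "));
```

Keeping these settings in one file is what lets docker-compose up replace the long commands.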

To go further, dockerize your next project and share its image on Docker Hub for other users.

Below are some useful Docker commands:

docker pull downloads an image from a registry (Docker Hub by default) to the local machine.

docker run creates and starts a new container from an image, pulling the image first if it is not available locally.

docker stop stops a running container, for example to make changes; docker start starts it again.

docker run -d runs the container in detached mode (in the background).

docker run -d -p 5000:7000 runs the container in detached mode and publishes container port 7000 on host port 5000.

docker ps -a lists all containers, including stopped ones.

docker images lists all the images stored locally.

docker ps lists the running containers; pass a container ID or name from that list to docker logs to see its output.

Credits: thanks to NetNinja and Nana for your videos and continuous impact on the community.